How to Fix Crawled Currently Not Indexed

If you open the pages section of your search console account, you will see that pages are divided into two categories - indexed pages and not indexed pages. The search console also lists the reasons why some of your pages have not been indexed, and these reasons vary. Some pages are not indexed because they have been marked as no index. Other pages are not indexed because they redirect to other pages or return a 404.

However, not every page that you submit in your xml sitemap will necessarily get indexed. If you are wondering why some pages are not getting indexed, there can be several reasons behind it. A url that is not indexed yet has not necessarily been skipped by Googlebot; it may already have been crawled.

What does crawled currently not indexed mean?

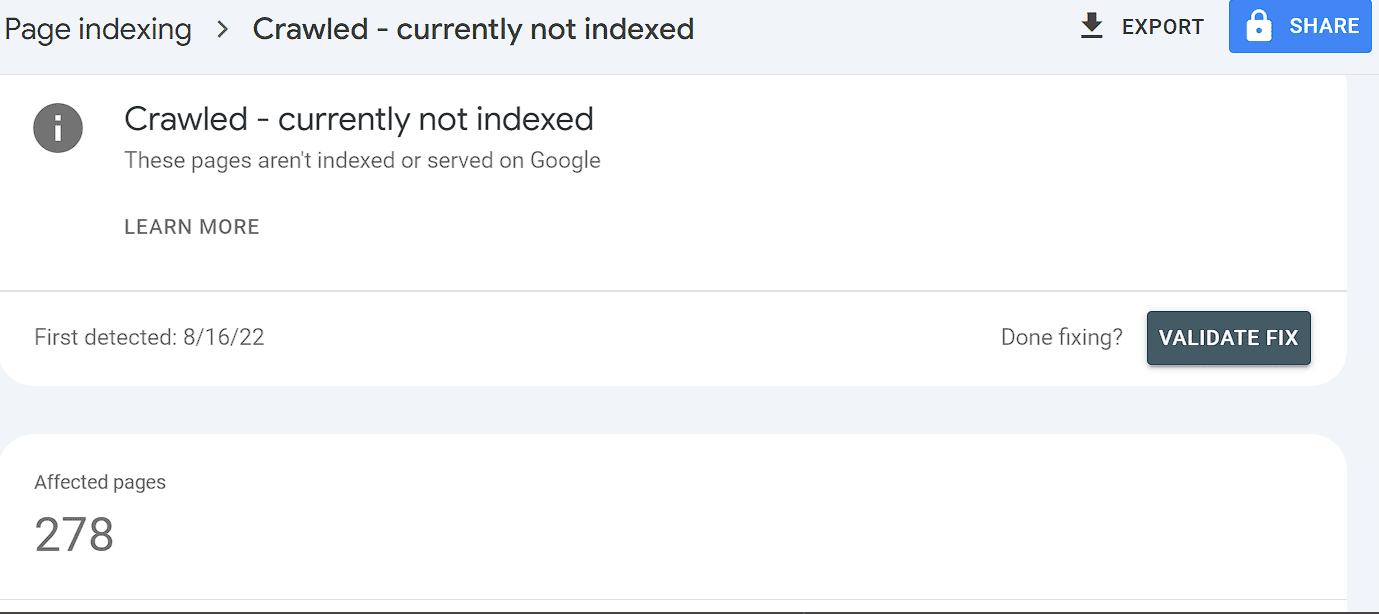

You might have come across the status - ‘crawled, currently not indexed’ - among the not indexed pages. These pages have been crawled but they have not made it into the Google index. We will discuss the possible reasons that these urls have not been indexed. However, first let us tell you that not all the pages bearing the status crawled - currently not indexed will necessarily get indexed in the future.


This is because the section can include urls that were crawled by Google but are not in your sitemap, as well as urls that you do not want indexed. It can also include urls that are in your sitemap and that you do want indexed.

First of all, this status means that these urls are still being processed. In the future, some of these urls might end up being indexed and many might not. After further processing, a lot of them might end up under ‘marked as no index’, ‘pages with redirect’ or even ‘404 pages’. Even the urls in the crawled - currently not indexed category that are ‘index and follow’ or included in the sitemap may or may not get indexed.

What you can do at your end is increase the chances of the right urls getting indexed in the future.

How to fix crawled currently not indexed?

To fix crawled - currently not indexed, you must first review the urls included in this section.

You should not be too worried, since in most cases this status does not signify an error or a major quality issue. Google is simply informing you that these urls have not been fully processed yet. It is perfectly normal to find some indexable urls under this category. The rest are likely urls you do not want indexed, which will be processed in the future but will not get indexed.

However, if you see a significant number of urls that are indexable or included in your sitemap and you want them indexed quicker, then there are a few steps you can take to fix the issue.

An important thing to note is that a page marked as crawled - currently not indexed is not always missing from Google. In a large number of cases it is just a reporting discrepancy: Google has indexed the url, but the report can take some time to show its status as indexed. To verify whether such a url has been indexed, use the url inspection tool. Click on the url and then click on inspect url. If the status on the next page is indexed, you can be certain it is no more than a reporting discrepancy.

The data inside the search console will refresh after some time and the urls will be reported as indexed. All you need to do is verify using the url inspection tool. For urls that have been marked as no index, or other urls that you do not want indexed, you do not need to do anything.

If the url is indexable, meaning you want it indexed and have included it in your sitemap, and the url inspection tool also shows it as crawled - currently not indexed, you can ask Google to index it by clicking on request indexing. You can check these urls one by one and request indexing for those that the url inspection tool does not show as indexed. Remember that the url inspection tool gives you the latest status of an individual url, while the reporting in the search console can be slightly delayed.
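If you check many urls, the same decision can be scripted. The sketch below is only an illustration: it assumes you already have a url inspection result as a dictionary shaped like the responses of the Search Console URL Inspection API (the `inspectionResult`, `indexStatusResult`, `verdict` and `coverageState` field names are taken from that API as an assumption, and the function name and return labels are made up for this example).

```python
def classify_inspection(response: dict) -> str:
    """Decide what to do with one url based on its inspection result.

    Returns one of three hypothetical labels:
      "indexed"          - the live status says the url is on Google
      "request_indexing" - crawled but not indexed, so a request may help
      "review"           - anything else; look at the url manually
    """
    status = response.get("inspectionResult", {}).get("indexStatusResult", {})
    verdict = status.get("verdict", "")
    coverage = status.get("coverageState", "").lower()

    # Assumption: a PASS verdict means the url is indexed.
    if verdict == "PASS":
        return "indexed"
    # Assumption: the coverage text mirrors the label shown in the report.
    if "crawled" in coverage and "not indexed" in coverage:
        return "request_indexing"
    return "review"


# Invented sample data, not a real API response.
sample = {
    "inspectionResult": {
        "indexStatusResult": {
            "verdict": "NEUTRAL",
            "coverageState": "Crawled - currently not indexed",
        }
    }
}
print(classify_inspection(sample))
```

A url that comes back as "indexed" here is the reporting discrepancy case, so nothing needs to be done for it.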

If you see a large number of indexable urls ending up as crawled - currently not indexed, you can also use ‘validate fix’ after making appropriate changes. This asks Google to crawl these urls again, so it can index the ones that are index and follow.

However, it is important to focus on the reasons that are keeping these urls from getting indexed. You must apply the fixes and then validate the fix so that Google will crawl again and index the right urls.

What can be the reasons that some urls are not being indexed by Google? Does Google consider these urls as unimportant or are they duplicate content or is there some other reason? Is there a quality issue with these pages or is it because there is some technical issue preventing their indexation?

If you have previously applied fixes and validated them in Google Search Console without positive results, the real issue might lie somewhere else. If most of the pages under this status - crawled currently not indexed - are archive pages or feed pages, you can ignore the warning since these pages are most likely no index. If you see broken urls or 404 urls, you can fix them by redirecting them to valid and relevant pages.

However, if your previous efforts have not yielded any results and a large number of pages under crawled - currently not indexed are the valid pages requiring indexation, it is an indication that it could be a sitewide quality issue requiring improvement.

As a first step, make a list of the indexable urls that have been crawled and currently not indexed. These are the urls you need to focus upon and you can safely ignore the rest. Check their status using the url inspection tool. If these pages are indexed then you can stop here.
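One way to build this list is to compare the urls in your sitemap against the urls exported from the crawled - currently not indexed report. The following is a minimal sketch that assumes you have the sitemap as an xml string and the report as a plain list of urls; the function names and the sample urls are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace used by standard xml sitemaps.
SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def sitemap_urls(sitemap_xml: str) -> set[str]:
    """Collect every <loc> value from a sitemap."""
    root = ET.fromstring(sitemap_xml)
    return {loc.text.strip() for loc in root.iter(f"{SITEMAP_NS}loc") if loc.text}


def urls_to_focus_on(sitemap_xml: str, not_indexed_urls: list[str]) -> list[str]:
    """Keep only the not indexed urls that you actually submitted for indexing."""
    wanted = sitemap_urls(sitemap_xml)
    return sorted({u.strip() for u in not_indexed_urls if u.strip() in wanted})


# Invented example data.
sitemap = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://example.com/guide/</loc></url>"
    "<url><loc>https://example.com/pricing/</loc></url>"
    "</urlset>"
)
report = ["https://example.com/guide/", "https://example.com/feed/"]
print(urls_to_focus_on(sitemap, report))
```

Here the feed url drops out because it is not in the sitemap, which matches the advice to ignore urls you never wanted indexed.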

If they are not indexed and the inspection tool also shows them as not indexed, you must fix any quality issues on your website before moving ahead and making a request to validate the fix. Unless you fix the quality issues, the status of these urls might remain unchanged despite your requests for validation.

Is your website new? If your website is new and has low domain authority, then it is going to take Google some time to index these urls. You should focus on creating more content and acquiring backlinks in the meantime. A delay does not necessarily mean that there is a quality issue with your website. It may simply be that a new website needs to build trust and earn more backlinks before all of its content gets indexed. You should also review your content, since good quality content tends to get indexed faster.

If it is an old website and you are still seeing a significant number of urls, say more than 200, being crawled and not indexed, you can move ahead and apply the fixes.

First, you will need to review the quality of content on these pages. Do most of these pages have thin content? Compared to the other pages, do these pages have less content or lower quality content? Try to increase the length of content on these pages and improve its quality. This will not have an instant impact, because quality improvement in blogging is an ongoing process and you must continuously focus on addressing issues that hurt quality or user experience. Both these factors have become significant in terms of rankings.
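To get a rough first pass at which pages are thin compared to the rest, you could compare word counts. This is only a crude proxy under stated assumptions: word count is not a Google metric, the 0.5 ratio is an arbitrary threshold chosen for illustration, and the function names and sample pages are hypothetical.

```python
import re
from statistics import median


def word_count(text: str) -> int:
    """Count word-like tokens in the visible text of a page."""
    return len(re.findall(r"\w+", text))


def thin_pages(pages: dict[str, str], ratio: float = 0.5) -> list[str]:
    """Return urls whose word count is below `ratio` times the site median."""
    counts = {url: word_count(text) for url, text in pages.items()}
    if not counts:
        return []
    typical = median(counts.values())
    return sorted(url for url, count in counts.items() if count < ratio * typical)


# Invented example: two long pages and one very short one.
pages = {
    "/long-guide/": "word " * 1000,
    "/tutorial/": "word " * 900,
    "/short-note/": "word " * 100,
}
print(thin_pages(pages))
```

Pages flagged this way still need a manual read, since a short page can be perfectly useful and a long one can still be low quality.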

Conduct an SEO Audit of your Website

Conduct a detailed site audit and fix all the SEO and technical issues you can find on your site:

- Fix canonical urls
- Fix redirects
- Redirect 404 errors
- Improve content quality
- Improve website speed and core web vitals
- Fix duplicate content and thin content
- Improve backlink profile
- Improve internal linking structure
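Two of the technical checks from this list, the canonical url and an accidental no index tag, can be sketched with Python's standard library. This is a simplified illustration that assumes you already have the page html as a string; the class and function names are made up, and it only looks at the `<link rel="canonical">` and robots `<meta>` tags, not at HTTP headers.

```python
from html.parser import HTMLParser


class IndexabilityParser(HTMLParser):
    """Collect the canonical url and any noindex directive from page html."""

    def __init__(self):
        super().__init__()
        self.canonical = None
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "link" and (attrs.get("rel") or "").lower() == "canonical":
            self.canonical = attrs.get("href")
        elif tag == "meta" and (attrs.get("name") or "").lower() in ("robots", "googlebot"):
            if "noindex" in (attrs.get("content") or "").lower():
                self.noindex = True


def indexability_issues(url: str, html: str) -> list[str]:
    """List the tag-level problems that could keep `url` out of the index."""
    parser = IndexabilityParser()
    parser.feed(html)
    issues = []
    if parser.noindex:
        issues.append("noindex")
    if parser.canonical is None:
        issues.append("missing canonical")
    elif parser.canonical.rstrip("/") != url.rstrip("/"):
        issues.append("canonical points elsewhere")
    return issues


# Invented example page.
html = (
    '<html><head><link rel="canonical" href="https://example.com/other/">'
    '<meta name="robots" content="noindex, follow"></head></html>'
)
print(indexability_issues("https://example.com/page/", html))
```

A page you want indexed should come back with an empty list; anything else is worth fixing before you validate the fix.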

All the above steps are crucial in terms of improving site quality. The technical aspects like website speed and core web vitals matter for both SEO and user experience.

Check if there are enough internal pages linking to the content that has been crawled but not indexed yet. Focus on internal linking and improve your internal linking structure. Add internal links to pages that have not been indexed. Pages that are linked to more often from other pages on your website tend to do better, since Google is more likely to treat them as higher value pages.
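If you have a crawl of your own site, counting inbound internal links is straightforward. The sketch below assumes a hypothetical `link_graph` mapping each page to the internal pages it links to (for example, exported from a crawler); the function names and the minimum of 2 links are illustrative choices, not a rule from Google.

```python
from collections import Counter


def inbound_link_counts(link_graph: dict[str, list[str]]) -> Counter:
    """Count how many distinct pages link to each page."""
    counts = Counter({page: 0 for page in link_graph})
    for source, targets in link_graph.items():
        for target in set(targets):  # count each linking page once
            if target != source:     # ignore self links
                counts[target] += 1
    return counts


def weakly_linked(link_graph: dict[str, list[str]],
                  candidates: list[str],
                  min_links: int = 2) -> list[str]:
    """Return candidate urls with fewer than `min_links` inbound internal links."""
    counts = inbound_link_counts(link_graph)
    return sorted(url for url in candidates if counts[url] < min_links)


# Invented example site with four pages.
graph = {
    "/": ["/a", "/b"],
    "/a": ["/b", "/"],
    "/b": ["/"],
    "/c": [],
}
print(weakly_linked(graph, ["/a", "/b", "/c"]))
```

Running this against your list of not indexed urls shows which ones would benefit most from a few extra internal links.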

Use a tool like Semrush to check for SEO issues and canonical related errors. Run a Semrush audit and fix the highlighted issues.

Remove low quality pages from your website systematically and redirect them to relevant high quality content. If there is content on your website that you consider outdated or low quality, consider removing it from your website and replacing it with better, higher quality content. Otherwise, rewrite the content to improve its quality and let Google crawl it.

That’s it. Improving content quality will have a strong impact on your overall rankings. Even if it takes Google some time, you will see your rankings and indexation status improving in weeks or a few months. Do not forget that user experience also affects indexation status and rankings. Make your website easy to navigate and optimize it for faster loading times.

Google’s core update in March 2024 targets low quality, spammy and AI generated content. So, you must try to remove any such content from your website to avoid a sitewide penalty.

Use instant indexation to get content indexed faster. An SEO plugin like AIOSEO, Rank Math or Yoast Pro can help you get urls indexed in bulk.

Remember that in most cases, crawled - currently not indexed does not signify any error. You might discover that the urls are actually indexed when you use the url inspection tool. Otherwise, you can try the fixes detailed above to improve url indexation and site rankings.
