Discovered Currently Not Indexed

How to deal with discovered, currently not indexed urls in GSC

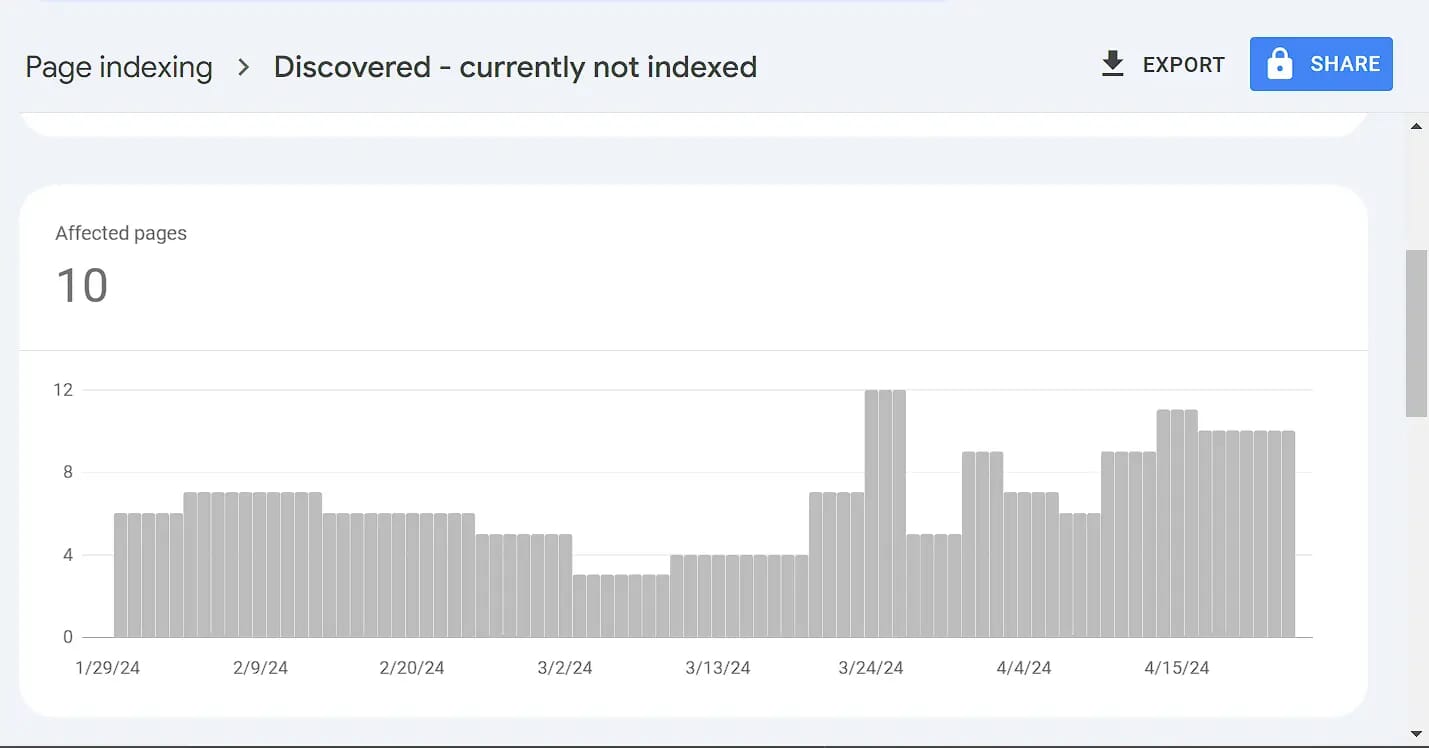

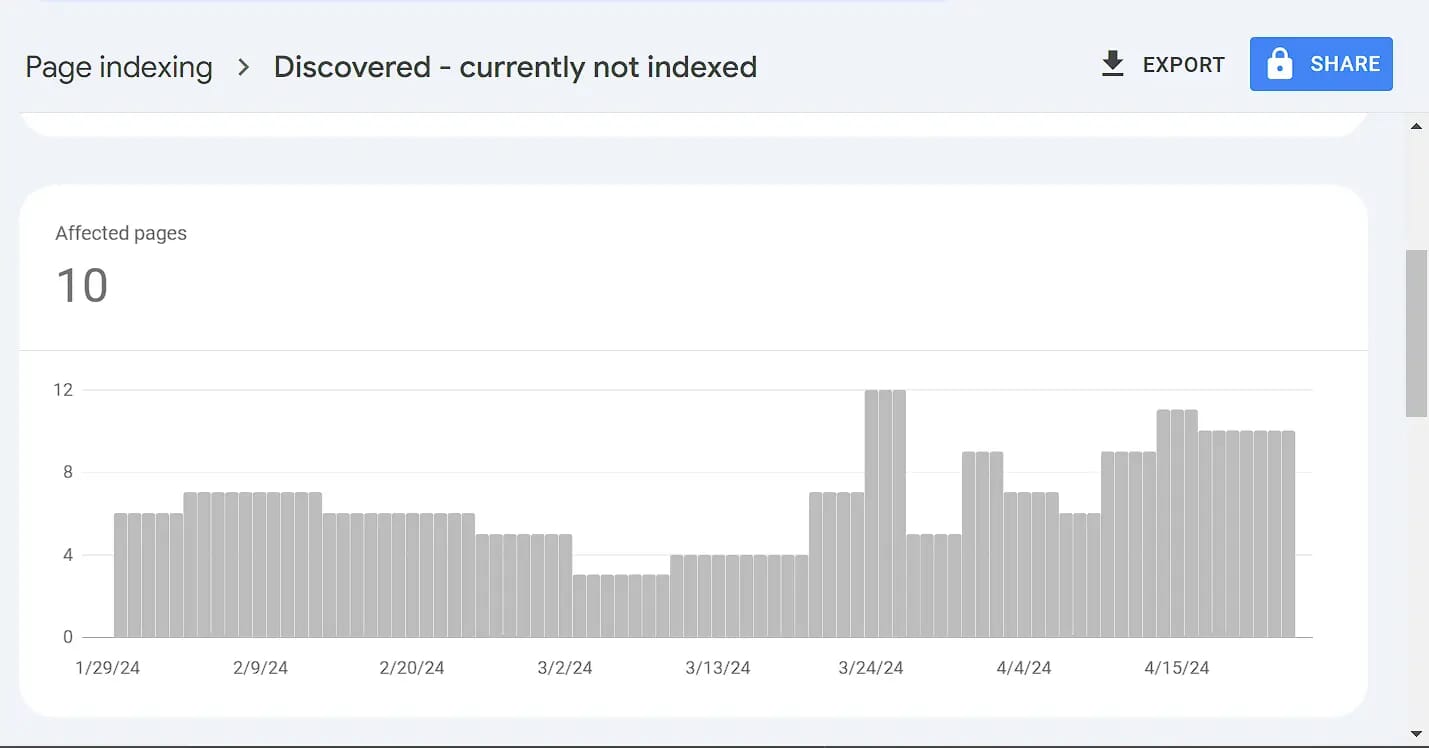

When you look inside your Google Search Console data, you might come across several scenarios. Some urls may be indexed and several might not be indexed. Indexation issues are common across websites and particularly low DR or new websites, face more of such issues.

Every website/blog owner wants site urls to get quickly indexed so that they can appear in the search results. The urls that have not been indexed cannot appear in searches. One of the common scenarios that webmasters face is that a url has been discovered and not indexed. What happens in such cases is that for weeks and weeks you keep coming back to the search console to see that the number of urls that have been discovered but not indexed remains the same. You want Google to process these urls., but they are not getting processes. Google is taking longer than expected to process these urls and it is normal to feel frustrated over it.

In this post, we are going to describe the issue and provide a resolution regarding how webmasters can fix the “discovered, currently not indexed error”.

Rather than being an error, it is more of a status. It shows that Google is crawling your website less often and that there are several such urls that it has not processed and there can be different reasons behind it which can be fixed.

If you think it is a bad thing then it is not. It is quite common to see such urls in your search console and as we already mentioned, it is generally not a bad indication. Moreover, all the urls that you see under this status are not going to be indexed and neither do you want them all indexed. However, if you see a large number of such urls that should’ve been indexed but have been marked as discovered not indexed yet, you might need to take action.

On February 18, John Mueller of Google answered this question for a user.

User: We have many pages that are discovered but not indexed. How long can we expect this to last?

John Mueller: That can be forever. It’s something where we just do not crawl and index all pages. It’s completely normal for any website that we do not have everything (on the website) indexed. Especially with a newer website having a lot of content, it is quite expected that a lot of the content will be discovered and not indexed for a while and then over time usually it kind of shifts over.

John Mueller suggested that one should continue working over the website since Google’s focus rather than individual pages is on the website itself. One should make sure that Google’s system recognizes that there is value in crawling and indexing more. And then Google will crawl and index more.

So, that’s where your answer lies. It is a question of value and whether Google sees value in indexing more of the content or not. Content will get indexed faster, if Google finds it valuable.

If you see a lot of such new content not getting indexed, then you need to check your content strategy and evaluate a few more things about your website because as we already stated that it is about value and rather than being about individual pages, it is about the entire website.

Conduct an Audit of your website

Use an online tool like Semrush to evaluate your website SEO. If you see any such errors that might hurt your website like missing canonicals or other similar issues, you must fix them instantly. Generally, using a good SEO plugin like Rank Math, Yoast, AIOSEO or Slim SEO helps you fix the schema and canonical url related issues. If you are using Wordpress, a dedicated SEO plugin can fix most of such issues. Make sure you have submitted your xml sitemap to the search console. Check if your xml sitemap is loading without any issue. If there is any problem with the sitemap or if it is not loading as it must, fix that first. You must also check your website for broken links and 404 errors and fix them.

Check the robots.txt file

Your robots.txt file has an important role in terms of website SEO and indexation. Any errors in the robots.txt file can multiply your problems. Make sure you are not preventing Google from crawling your website through your robots.txt file. You can use the Google search console’s robots.txt tester to see if there is any problem that might be preventing Googlebot from crawling the website. If Google is crawling any such urls that it must not crawl, then you can prevent their crawling and wastage of your crawl budget using the robots.txt file.

Check out all urls that are discovered, not indexed yet

Take a look at all the urls that have been discovered and not indexed yet. Some of them might be urls like tag or archive urls that are ultimately not going to be indexed. You will not need to worry about these urls since after all you do not want them indexed.

Focus on the urls that you want indexed and make a list. If the number of such urls that are indexable which means they are included in the sitemap and you want them indexed. Unless the number of such urls is significant there is nothing to worry about. Even if their number is high, you can still fix them and sooner or later, Google will have indexed them.

Evaluate the useful pages and take a look at their content and other on page SEO elements. Fix anything that you believe is worth fixing. Inspect these urls using the url inspection tool and Google will tell you where it discovered those urls. You can also request indexing using the url inspection tool. However, whether it indexes the url or not is up to Google. You can still increase your chances by taking the following steps.

Check your internal linking structure and content quality

A poor internal linking structure will not yield favorable results in terms of SEO. So, make sure you have an internal linking strategy in place and that your internal linking structure is robust. Improve your internal linking structure to make sure that your content gets indexed faster. Any page that has been linked to internally from a higher number of pages receives higher weightage and Google considers such pages more important. The chances of such pages getting indexed faster is higher.

Moreover, internal linking helps Google discover and index new content faster. If Google encounters a url repeatedly while crawling pages, it is more likely to crawl that url and index it. Internal linking improves crawlability and therefore the chances of urls getting indexed. However, since it is a question of value and whether Google considers the page valuable or not depends on the quality of the page content. High quality and useful content will get indexed for sure.

Check website loading times and improve user experience

Website speed and user experience are of great importance in terms of SEO and affect your indexation status and rankings in Google searches. Slow loading websites are crawled less often and faster loading websites can be crawled faster and more. In its guide to managing crawl budget, Google states that sites that load faster will be crawled faster and more. So, make sure that your site loads efficiently.

Your crawl limit can go up or down for a number of reasons including how fast your website responds. If a website responds faster, your crawl limit will go up which means it will be crawled more and more of your content will be indexed.

So, manage your website’s speed. If you are using Wordpress, you can use caching, object caching, compression and minification to speed up your website. It will help you improve your crawl limit and increase the chances of urls getting indexed.

Check for duplicate and thin content

Duplicate content and thin content pages have less chances of getting indexed. So, if you have a lot of duplicate content, spread across several pages on your website, you can consolidate them. Similarly, you can add more content to the pages suffering from thin content to increase their crawlability and chances of getting indexed.

Keep generating good quality and original content

At last, since it is a question of value, Google will index the content that it thinks provides value and is useful for visitors. After Google’s latest core update that rolled out in March 2024, thin and low quality or spammy content will get deindexed without any warning. So, make sure that you keep generating good quality and high value content. If your website is new with lots of content, you should also focus on backlinking and increasing domain ratings. If you have been generating good quality content, sooner or later, it is going to be indexed.

Conclusion:

Your content needs to be indexed to rank in searches and it must be useful and valuable to get indexed and rank higher. If you see a lot of urls that have been discovered by Google but not indexed yet, you must remain patient and wait. Meanwhile, do a SEO audit of your website, improve internal linking structure and improve content quality as well as reduce bounce rate to make sure that Google crawls your website more often. It is more of a sitewide issue than limited to a few pages. You should focus on checking if there is a site wide quality issue since a lot of content not getting indexed might be a sitewide quality issue. Fix duplicate and thin content pages,

As already explained, ‘discovered, currently not indexed’ is a status and not really an error or a problem for you. It will be sorted out in future if you can mind a few things. New websites must focus on generating high value content consistently and making sure that pages load faster.

You must be careful about all the points listed above to make sure your site is crawled and valid content gets indexed. You can also prevent the crawling of unwanted pages using the robots.txt file and thus prevent the wastage of crawl budget. Google loves fast loading, high quality, well optimized, and user friendly websites. They are indexed faster and rank higher.

Suggested Reading

How to fix crawled currently not indexed